April 7, 2025

DotJS 2025: focus on the future of JavaScript and AI

7-minute read

DotJS 2025

The DotJS 2025 conference took place in the legendary Folies Bergère theatre in Paris. We were there and are here to share everything about this 2025 edition, marked by a special anniversary: the tenth edition 🎉. As you will see, the topics were more interesting than ever, and there were even a few surprises to end the day.

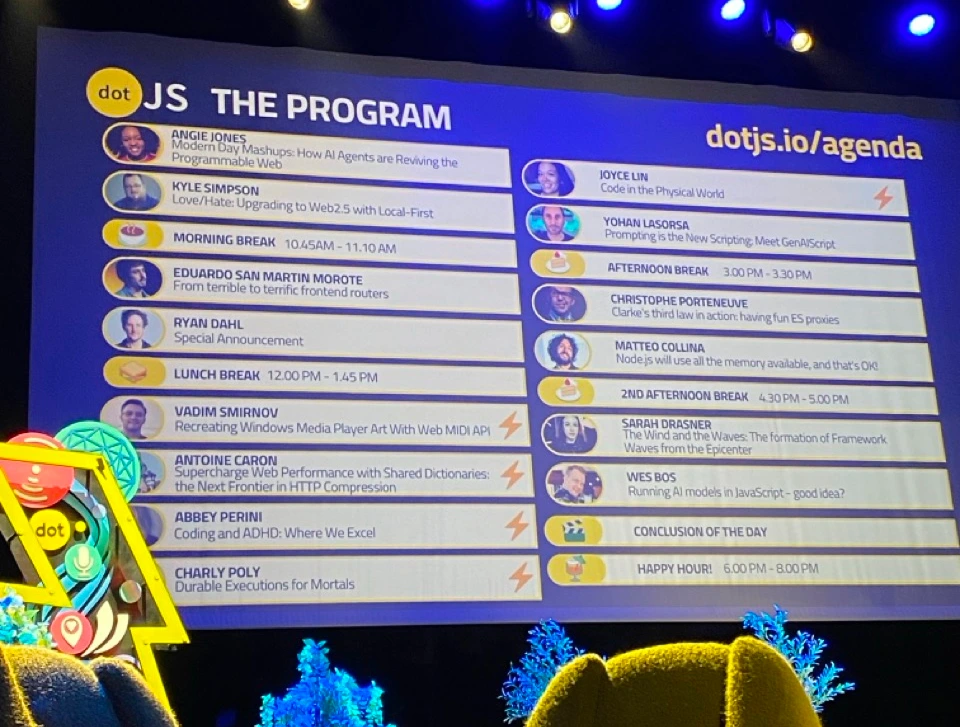

On the agenda

How AI Agents are Reviving the Programmable Web

Angie Jones takes us back to the era of mashups, when developers cobbled together apps by combining several services (e.g., Google Maps + Craigslist). This era gave birth to the API economy... before it began to lose steam due to maintenance and compatibility challenges.

Today, she sees a resurgence of this creativity thanks to AI agents and the Model Context Protocol (MCP), an open standard that lets AI agents communicate with APIs in a straightforward way through wrappers called MCP servers. The result: complex apps can now be created in natural language, without hand-coding each integration.
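To make the idea concrete, here is a minimal sketch of what an MCP server does under the hood, assuming a stripped-down setup. MCP messages are JSON-RPC 2.0, and a server answers `tools/list` (advertise its tools) and `tools/call` (run one). The `get_forecast` tool and its fake API are hypothetical, and the sketch omits the initialization handshake and the transport (stdio or HTTP) that a real server built with an official SDK would handle.

```typescript
// Simplified JSON-RPC 2.0 message shapes (MCP is built on JSON-RPC 2.0).
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number | string;
  method: string;
  params?: any;
}

interface JsonRpcResponse {
  jsonrpc: "2.0";
  id: number | string;
  result?: unknown;
  error?: { code: number; message: string };
}

// Hypothetical tool: an MCP server essentially exposes an existing API
// as named tools, each with a JSON Schema describing its inputs.
const tools = {
  get_forecast: {
    description: "Get a weather forecast for a city (fake data)",
    inputSchema: {
      type: "object",
      properties: { city: { type: "string" } },
      required: ["city"],
    },
    // Stand-in for a real HTTP call to a weather API.
    run: async (args: { city: string }): Promise<string> => `Forecast for ${args.city}: sunny`,
  },
};

async function handle(req: JsonRpcRequest): Promise<JsonRpcResponse> {
  switch (req.method) {
    case "tools/list": {
      // Advertise the available tools so the agent can decide what to call.
      const list = Object.entries(tools).map(([name, t]) => ({
        name,
        description: t.description,
        inputSchema: t.inputSchema,
      }));
      return { jsonrpc: "2.0", id: req.id, result: { tools: list } };
    }
    case "tools/call": {
      const tool = tools[req.params?.name as keyof typeof tools];
      if (!tool) {
        return { jsonrpc: "2.0", id: req.id, error: { code: -32602, message: `Unknown tool: ${req.params?.name}` } };
      }
      const text = await tool.run(req.params.arguments);
      // Tool results come back as a list of content items.
      return { jsonrpc: "2.0", id: req.id, result: { content: [{ type: "text", text }] } };
    }
    default:
      return { jsonrpc: "2.0", id: req.id, error: { code: -32601, message: "Method not found" } };
  }
}
```

The agent never needs to know how the weather API works: it discovers the tool through `tools/list` and calls it by name, which is what makes these wrappers composable.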

💡 Key takeaways from the talk

- 🧠 MCP: a standard protocol for connecting AI agents to APIs

- 🧰 More than 3,000 MCP servers are already available

- ⚙️ Compatible with all LLMs and agents (open source or not)

- ⚡️ Enables the creation of modern "mashups" in just a few prompts (e.g., a site generated from a Figma design)

- 💥 The programmable web is back, but this time with AI agents to automate compatibility

Love/Hate: Upgrading to Web2.5 with Local-First

Kyle Simpson criticizes false dichotomies (cloud vs. local, native JS vs. framework, JS vs. TS…) that stifle progress. According to him, Web 2.0 is collapsing, undermined by:

- the loss of user control over their data

- the increasing complexity of web apps

- illusory performance (the "zero install" is a lie)

- disproportionate costs for both developers and users

- a cloud dependence that excludes a large part of the world's population

To build a fairer, more sustainable, and accessible web, he suggests a transition to "Web 2.5" via the Local-First movement. He urges us to reconsider how we develop our apps and how we present the web to users.

💡 Key takeaways from the talk

- 📁 Local-First: data is primarily stored on the user's device and synced as needed

- 🔐 Identity can be managed locally, without a server, thanks to biometric passkeys and public keys

- 🌍 Local-First enables a more resilient, economical, offline-friendly, and sovereign web

- 🧩 Local-First apps rely on open standards (PWA, IWA, periodic syncing, peer-to-peer, etc.)

- 🔄 Web 2.5 is a pragmatic bridge between the current web and a decentralized alternative, without waiting for Web3
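The talk does not prescribe a specific sync algorithm. As one common example of how Local-First apps reconcile copies of the same data, here is a last-writer-wins (LWW) merge sketch, where each field records when and on which device it was last written. The `Stamped`/`Doc` shapes and device IDs are hypothetical; real apps often rely on richer CRDTs, and wall-clock timestamps can drift between devices.

```typescript
// Hypothetical per-field metadata: when and where the field was last written.
interface Stamped<T> {
  value: T;
  updatedAt: number; // timestamp on the writing device
  replica: string; // ID of the device that wrote it
}

type Doc = Record<string, Stamped<unknown>>;

// True if write `a` should win over write `b`.
function wins(a: Stamped<unknown>, b: Stamped<unknown>): boolean {
  if (a.updatedAt !== b.updatedAt) return a.updatedAt > b.updatedAt;
  // Deterministic tie-break so every device picks the same winner.
  return a.replica > b.replica;
}

// Merge a remote copy into the local one, field by field. As long as
// (updatedAt, replica) pairs are unique, the winners do not depend on
// merge order, so all devices converge to the same values.
function merge(local: Doc, remote: Doc): Doc {
  const result: Doc = { ...local };
  for (const [key, theirs] of Object.entries(remote)) {
    const ours = result[key];
    if (ours === undefined || wins(theirs, ours)) result[key] = theirs;
  }
  return result;
}

// Two devices edited offline: different fields, plus one shared field.
const laptop: Doc = {
  title: { value: "Groceries", updatedAt: 1, replica: "laptop" },
  done: { value: false, updatedAt: 5, replica: "laptop" },
};
const phone: Doc = {
  title: { value: "Shopping", updatedAt: 3, replica: "phone" },
  note: { value: "milk", updatedAt: 2, replica: "phone" },
};

console.log(merge(laptop, phone));
```

Each device keeps working on its local copy and only exchanges these stamped fields when a connection is available, which is the "synced as needed" part of the model.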

From terrible to terrific frontend routers

Yohan traces the evolution of frontend routers, from hacks based on hashchange in Internet Explorer to the promising future of the Navigation API, a new native browser API.

Today, JS frameworks (React, Vue, etc.) have to reinvent navigation mechanisms because of the History API's limitations: a poorly designed API, unexpressive events, no automatic scroll or focus handling, and unnecessary complexity…

💡 Key takeaways from the talk

- 🔄 The Navigation API allows you to intercept all navigations (clicks, forms, pushState, etc.)

- ⚙️ It offers a real native alternative to JS routers in frameworks

- 💡 Scroll, focus, transitions, and history are all handled natively and properly

- 🌍 It's standardized (HTML spec), included in Interop 2025, and Firefox and Safari plan to implement it

- 🧪 Not widely available in production yet, but already testable in Chrome
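Here is a minimal sketch of a router built on the Navigation API's `navigate` event and its `intercept()` method. The route table and handlers are hypothetical, and the small `NavigationLike`/`NavigateEventLike` interfaces are declared locally (covering only the members used) as an assumption, so the example compiles even if your TypeScript DOM typings don't include the Navigation API yet.

```typescript
// Minimal local types covering only the Navigation API members used below.
interface NavigateEventLike {
  canIntercept: boolean;
  hashChange: boolean;
  downloadRequest: string | null;
  destination: { url: string };
  intercept(options: { handler: () => Promise<void> }): void;
}

interface NavigationLike {
  addEventListener(type: "navigate", listener: (event: NavigateEventLike) => void): void;
}

type RouteHandler = (url: URL) => Promise<void>;

// A single listener sees every navigation: link clicks, form submissions,
// history.pushState(), back/forward, etc.
function installRouter(nav: NavigationLike, routes: Record<string, RouteHandler>): void {
  nav.addEventListener("navigate", (event) => {
    // Leave cross-origin navigations, fragment jumps, and downloads to the browser.
    if (!event.canIntercept || event.hashChange || event.downloadRequest !== null) return;

    const url = new URL(event.destination.url);
    const handler = routes[url.pathname];
    if (!handler) return; // unknown route: let a normal full-page load happen

    // Turn the navigation into a same-document one. The browser updates the URL
    // and history entry, and by default handles scroll and focus once the
    // handler's promise settles.
    event.intercept({ handler: () => handler(url) });
  });
}
```

In a supporting browser you would call something like `installRouter((window as any).navigation, { "/": renderHome, "/about": renderAbout })`, where `renderHome` and `renderAbout` stand for your own (hypothetical) view functions. Note that there is no click handler on links and no manual `pushState()` call: that bookkeeping is exactly what the History API forced routers to do by hand.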

Special announcement (Deno + OpenTelemetry)

Ryan Dahl (creator of Node.js and Deno) announces that Deno 2.2 natively integrates OpenTelemetry, a standard for observability (logs, traces, metrics). Logs, traces, and errors are correlated automatically, without changing any code, even in a Node.js project run with Deno.

With a simple flag (--unstable-otel) and an environment variable, each HTTP request gets a trace ID attached to its logs, making production debugging much more readable and reliable.

Deno takes advantage of a recent V8 improvement (Continuation Local Storage) to make this efficient, and will also support AsyncContext, the standard API currently being specified at TC39 (Stage 2).
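Automatic correlation depends on the runtime carrying a per-request context across asynchronous calls. The same mechanism can be sketched today with Node.js's `AsyncLocalStorage` (from `node:async_hooks`), which solves the problem the AsyncContext proposal aims to standardize. This is a swapped-in illustration of the idea, not Deno's implementation; the `handleRequest` handler and the trace IDs are hypothetical.

```typescript
import { AsyncLocalStorage } from "node:async_hooks";
import { randomUUID } from "node:crypto";

// Per-request context, carried implicitly across sync and async calls.
interface RequestContext {
  traceId: string;
}

const requestContext = new AsyncLocalStorage<RequestContext>();
const logLines: string[] = [];

// A logger that tags each line with the current request's trace ID, if any.
function log(message: string): void {
  const ctx = requestContext.getStore();
  const line = `[trace=${ctx?.traceId ?? "none"}] ${message}`;
  logLines.push(line);
  console.log(line);
}

// Hypothetical handler: code after the await still sees the same context,
// without passing the trace ID around explicitly.
async function handleRequest(path: string): Promise<void> {
  log(`start ${path}`);
  await new Promise((resolve) => setTimeout(resolve, 10));
  log(`end ${path}`);
}

// Run `fn` inside a fresh context (random trace ID unless one is given).
function withTrace<T>(fn: () => T, traceId: string = randomUUID()): T {
  return requestContext.run({ traceId }, fn);
}

// Two concurrent "requests": their log lines interleave but stay correctly tagged.
void withTrace(() => handleRequest("/a"), "trace-a");
void withTrace(() => handleRequest("/b"), "trace-b");
```

Without the context store, the two `end` lines would be indistinguishable; this is the "which log belongs to which request" problem the talk describes.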

💡 Key takeaways from the talk

- 🦕 Deno 2.2 natively integrates OpenTelemetry

- ⚙️ Just add a flag to get correlated traces, logs, and metrics

- 🔍 No need to guess which logs belong to which request, even across asynchronous code

- 🌐 Also works with Node.js apps launched via Deno

- 📈 Can send data to Grafana, Datadog, etc.

- 🧪 Based on standards in progress (AsyncContext), without any magic

Clarke's Third Law in Action: Having Fun with ES Proxies

For the duration of his talk, Christophe takes off his lecturer's cap and puts on his speaker's hat to delve into the possibilities offered by ES Proxies, an underutilized JavaScript feature that's been available for 10 years.

JS proxies allow you to dynamically intercept all interactions with an object (reading, writing, calling, etc.) via "traps". It's a powerful form of meta-programming that paves the way for useful, fun, or just plain WTF uses.
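A minimal sketch of two traps, assuming a hypothetical `User` shape: `get` records every property read (observability) and `set` rejects invalid writes (runtime validation). Forwarding through `Reflect` keeps the default behavior for everything the traps don't change.

```typescript
interface User {
  name: string;
  age: number;
}

const reads: string[] = [];

function createUser(initial: User): User {
  return new Proxy(initial, {
    // "get" trap: runs on every property read.
    get(target, key, receiver) {
      reads.push(String(key));
      return Reflect.get(target, key, receiver);
    },
    // "set" trap: runs on every property write; throwing rejects the write.
    set(target, key, value, receiver) {
      if (key === "age" && (!Number.isInteger(value) || value < 0)) {
        throw new RangeError(`age must be a non-negative integer, got ${String(value)}`);
      }
      return Reflect.set(target, key, value, receiver);
    },
  });
}

const user = createUser({ name: "Ada", age: 36 });
user.age = 37; // accepted by the set trap
console.log(user.age); // 37 (and "age" is recorded in `reads`)
try {
  user.age = -1; // rejected by the set trap
} catch (e) {
  console.log((e as Error).message);
}
```

Callers use `user` like a plain object; the interception is invisible to them, which is what lets libraries such as Vue 3 or Immer build on proxies.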

💡 Key takeaways from the talk

- 🧩 ES Proxies allow you to dynamically manipulate the behavior of JS objects

- 🚀 They form the basis of Vue 3, Immer, MobX, and many other modern libraries

- 🎯 Use cases: runtime validation, observability, immutability, custom DSL, etc.

- ⚡️ Highly performant, with very little cost compared to Object.defineProperty

- ✅ Native support in all modern environments

Node.js will use all the available memory, and that's OK!

Matteo debunks a common misconception: no, your Node.js app does not necessarily have a memory leak just because it's using all the RAM. In fact, this is the normal behavior of V8's garbage collector (GC).

Node reserves memory (RSS) and doesn't release it immediately, even when it's no longer in use, because the GC expects more allocations to come. The real indicator to monitor is the ratio between heapUsed and heapTotal, not raw memory consumption alone.
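A minimal sketch of the metric recommended in the talk, using Node's built-in `process.memoryUsage()`. The 5-second sampling interval is an arbitrary choice for illustration.

```typescript
// Share of the reserved V8 heap that currently holds live objects (0 to 1).
function heapUtilization(): number {
  const { heapUsed, heapTotal } = process.memoryUsage();
  return heapUsed / heapTotal;
}

const toMb = (bytes: number): string => `${(bytes / 1024 / 1024).toFixed(1)} MB`;

// Log RSS next to heap usage: RSS can stay high while the heap still has room.
function reportMemory(): void {
  const { rss, heapUsed, heapTotal } = process.memoryUsage();
  const pct = (heapUtilization() * 100).toFixed(0);
  console.log(`rss=${toMb(rss)} heap=${toMb(heapUsed)}/${toMb(heapTotal)} (${pct}%)`);
}

// Sample every 5 s; unref() so monitoring alone doesn't keep the process alive.
setInterval(reportMemory, 5_000).unref();
reportMemory();
```

A high RSS with a comfortable heapUsed/heapTotal ratio is the "normal" situation the talk describes; a ratio that keeps climbing across GC cycles is the more worrying signal.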

By adjusting the size of the "young generation" using V8 flags (--max-semi-space-size), you can reduce latency and increase throughput, especially in SSR apps (React, etc.), which generate lots of temporary objects.

💡 Key takeaways from the talk

- 💥 Don't kill your Node app if it's using 80+% of RAM: it's normal.

- 🔍 Monitor heapUsed / heapTotal rather than just RSS.

- ⚙️ Fine-tune V8 flags to adjust memory space sizes (--max-semi-space-size and --max-old-space-size).

- 🚀 Increasing the young generation size allows more short-lived objects to stay in the faster memory space.

- 📊 The open-source Watt tool makes it easy to monitor your multi-threaded Node apps.
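To see what the young-generation flags change, here is a sketch using Node's built-in `v8.getHeapSpaceStatistics()`, which lists V8's heap spaces: `new_space` is the young generation and `old_space` the old generation. One way to compare is to run the script with and without a larger `--max-semi-space-size` (in MB; for example `node --max-semi-space-size=64 app.js`, where 64 is an arbitrary value). Keep in mind that the reported size is the current capacity, which V8 grows on demand up to the configured maximum.

```typescript
import { getHeapSpaceStatistics } from "node:v8";

interface SpaceSummary {
  sizeMb: number; // memory currently reserved for the space
  usedMb: number; // memory occupied by objects in the space
}

const mb = (bytes: number): number => Math.round((bytes / 1024 / 1024) * 10) / 10;

// Summarize each V8 heap space: "new_space" is the young generation
// (short-lived objects); "old_space" holds objects promoted from it.
function heapSpaces(): Record<string, SpaceSummary> {
  const summary: Record<string, SpaceSummary> = {};
  for (const space of getHeapSpaceStatistics()) {
    summary[space.space_name] = {
      sizeMb: mb(space.space_size),
      usedMb: mb(space.space_used_size),
    };
  }
  return summary;
}

console.table(heapSpaces());
```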

The Wind and the Waves

Innovation in frameworks never happens in isolation: Angular, React, Vue, Solid, Svelte… all draw inspiration from one another. Angular is an example of a wave shaped by many winds (influences).

She then presents Angular's latest features:

- Signals: a new reactivity system inspired by Solid, simpler and more efficient than RxJS.

- Lazy loading + progressive hydration: on-demand loading with @defer, inspired by React Suspense, Astro, and Qwik.

- Migration tools: ng update + Tsurge (global analysis, experimental).

- DX improvement: simpler syntax, new interactive documentation.
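To illustrate the reactivity model behind Signals, here is a toy push-pull implementation. It borrows the shape of Angular's API (a signal is read by calling it and written with `set`/`update`, and `computed` derives memoized values), but it is a hypothetical sketch, not Angular's implementation: it never drops stale dependencies and has no effects or scheduling.

```typescript
type Getter<T> = () => T;

interface WritableSignal<T> extends Getter<T> {
  set(value: T): void;
  update(fn: (value: T) => T): void;
}

// The computed value currently being evaluated, if any; reads register it as a dependent.
let activeDependent: (() => void) | null = null;

function signal<T>(initial: T): WritableSignal<T> {
  let value = initial;
  const dependents = new Set<() => void>();
  const read = (() => {
    if (activeDependent) dependents.add(activeDependent);
    return value;
  }) as WritableSignal<T>;
  read.set = (next: T) => {
    if (Object.is(next, value)) return; // unchanged: nothing to invalidate
    value = next;
    for (const invalidate of [...dependents]) invalidate(); // push: mark dependents dirty
  };
  read.update = (fn) => read.set(fn(value));
  return read;
}

function computed<T>(derive: () => T): Getter<T> {
  let cached!: T;
  let dirty = true;
  const dependents = new Set<() => void>();
  const invalidate = () => {
    if (dirty) return; // already stale, downstream already notified
    dirty = true;
    for (const notify of [...dependents]) notify();
  };
  return () => {
    if (activeDependent) dependents.add(activeDependent);
    if (dirty) {
      // Pull: recompute lazily while tracking what derive() reads.
      const previous = activeDependent;
      activeDependent = invalidate;
      try {
        cached = derive();
      } finally {
        activeDependent = previous;
      }
      dirty = false;
    }
    return cached;
  };
}

const price = signal(10);
const quantity = signal(2);
const total = computed(() => price() * quantity());
console.log(total()); // 20
quantity.update((q) => q + 1);
console.log(total()); // 30
```

Writes only mark dependents as stale, and values are recomputed on the next read. This fine-grained tracking is what lets a framework update exactly the parts of the UI that depend on a changed value, without RxJS-style subscription management.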

💡 Key takeaways from the talk

- 🔄 Frameworks evolve together, not in isolation.

- 🧠 Angular adopts Signals, already used in production at Google (Wiz, YouTube…).

- ⚡ Built-in lazy loading combined with progressive hydration delivers major performance gains.

- 🛠 Angular offers enterprise-scale migration tools.

- ✨ The development experience is greatly improving.

Running AI models in JavaScript: good idea?

Wes Bos shares his enthusiasm for AI models executed in JavaScript, especially in the browser thanks to advances like WebGPU. The idea: avoid API/cloud round trips or the hassle of Python, and do everything locally, quickly, and at a lower cost.

He shows that, even though the majority of models are designed for Python, one can already do a lot of useful, fun, or creative things in JS: pose recognition, background removal, voice transcription, voice generation, translation, sentiment analysis, etc.

Notably, he explicitly referenced Kyle Simpson's Local-First talk from earlier in the day.

💡 Key takeaways from the talk

- 🚀 Fast local execution thanks to WebGPU: less latency, more responsiveness.

- 🔒 Privacy and cost: no server needed, no API to pay for, data stays on the device.

- 💡 Improved user experience: AI directly in the UI (translation, voice generation, etc.).

- 🌐 The JavaScript ecosystem is expanding: transformers.js, MediaPipe, ONNX, WebLLM, etc.

- 🚨 Known limitations: model size, varying device performance, uneven WebGPU support.
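Given the uneven WebGPU support, a common pattern is to feature-detect and fall back to WebAssembly. A minimal sketch: `navigator.gpu` is only exposed where WebGPU is available, and `requestAdapter()` resolves to `null` when no suitable GPU is found. The local `NavigatorLike` type is an assumption so the code compiles without WebGPU typings.

```typescript
// Local structural types (assumption) so this compiles without @webgpu/types.
interface GpuLike {
  requestAdapter(): Promise<unknown | null>;
}
interface NavigatorLike {
  gpu?: GpuLike;
}

type Backend = "webgpu" | "wasm";

// Prefer WebGPU when it is actually usable, otherwise fall back to WebAssembly.
async function pickBackend(nav: NavigatorLike): Promise<Backend> {
  if (!nav.gpu) return "wasm"; // WebGPU not exposed by this browser
  try {
    const adapter = await nav.gpu.requestAdapter();
    return adapter ? "webgpu" : "wasm"; // null: no suitable GPU adapter
  } catch {
    return "wasm"; // treat unexpected failures as "no WebGPU"
  }
}
```

In a browser you would call `pickBackend(navigator as NavigatorLike)` and pass the result to your inference library; for instance, assuming Transformers.js v3, as the `device` option of `pipeline()`.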

Wrap-up

As usual, the conference was a success: interesting topics, high-quality speakers, and flawless organization. And, as you probably noticed, AI-related topics have become increasingly plentiful and popular. Good thing the DotConferences team noticed as well: they invite you to DotAI 2025, a conference focused entirely on AI.

If you were not fortunate enough to attend the conference this year, we highly recommend you come next year. In the meantime, feel free to watch the replays on their YouTube channel, and check out the talk sketches made by @AmelieBenoit33.

See you next year 👋